---

base_model: unsloth/Qwen2.5-0.5B-Instruct

tags:

- text-generation-inference

- transformers

- unsloth

- qwen2

license: apache-2.0

language:

- en

---

As calling operations scale, it becomes clear that dialing and talking is not enough.

Even with a strong voice AI + telephony architecture, the real value shows up only when post-call actions are captured and executed in a robust, dependable and consistent way. Closing the loop matters more than just connecting the call.

To support that, we’re releasing our Hindi + English transcript analytics model, tuned specifically for call transcripts.

You can plug it into your calling or voice AI stack to automatically extract:

- Enum-based classifications (e.g., call outcome, intent, disposition)
- Conversation summaries
- Action items / follow-ups

It’s built to handle real-world Hindi, English, and mixed Hinglish calls, including noisy transcripts.

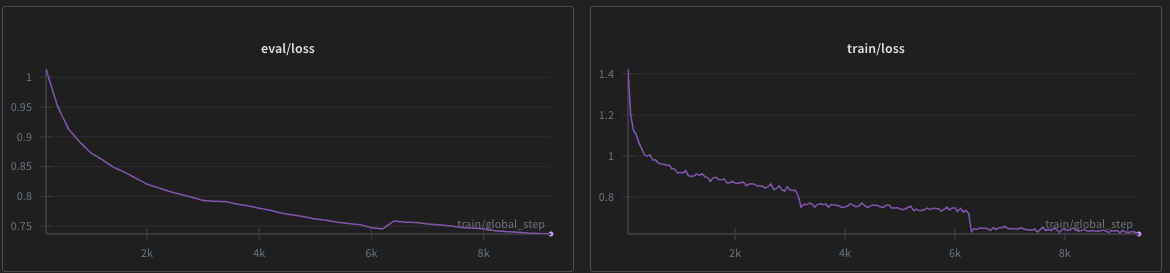

Finetuning parameters:

```
from trl import SFTConfig

# LoRA adapter settings
rank = 64
lora_alpha = rank * 2
target_modules = ["q_proj", "k_proj", "v_proj", "o_proj",
                  "gate_proj", "up_proj", "down_proj"]

# Supervised finetuning settings
SFTConfig(
    dataset_text_field = "prompt",
    per_device_train_batch_size = 32,
    gradient_accumulation_steps = 1, # Use GA to mimic batch size!
    warmup_steps = 5,
    num_train_epochs = 3,
    learning_rate = 2e-4,
    logging_steps = 50,
    optim = "adamw_8bit",
    weight_decay = 0.001,
    lr_scheduler_type = "linear",
    seed = SEED,  # SEED is set elsewhere in the training script
    report_to = "wandb",
    eval_strategy = "steps",
    eval_steps = 200,
)
```

The model was finetuned on ~100,000 curated transcripts across different domains and language preferences.
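As a rough sanity check on the settings above, the sketch below works out the effective batch size, the approximate number of optimizer steps, and the LoRA scaling factor. It assumes a single training device, exactly 100,000 training examples, and the standard LoRA scaling of `lora_alpha / rank`. None of these are stated in this card, so treat the numbers as estimates rather than a record of the actual run.

```python
import math

# Values taken from the finetuning parameters above.
rank = 64
lora_alpha = rank * 2
per_device_train_batch_size = 32
gradient_accumulation_steps = 1
num_train_epochs = 3
eval_steps = 200

# Assumptions (not stated in this card): one device, exactly 100,000 examples.
num_devices = 1
num_examples = 100_000

# Standard LoRA scales the adapter update by alpha / r.
lora_scale = lora_alpha / rank

# Examples consumed per optimizer step.
effective_batch_size = (
    per_device_train_batch_size * gradient_accumulation_steps * num_devices
)

steps_per_epoch = math.ceil(num_examples / effective_batch_size)
total_steps = steps_per_epoch * num_train_epochs

# Evaluation runs once every `eval_steps` optimizer steps.
num_evals = total_steps // eval_steps

print(f"LoRA scale: {lora_scale}")
print(f"Effective batch size: {effective_batch_size}")
print(f"Steps per epoch: {steps_per_epoch}, total steps: {total_steps}")
print(f"Evaluation runs: {num_evals}")
```

Under these assumptions, `warmup_steps = 5` covers only a tiny fraction of the run, so the linear schedule is decaying for almost all of training.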

For best output, provide the schema below:

```
response_schema = {
    "type": "object",
    "properties": {
        "key_points": {
            "type": "array",
            "items": {"type": "string"},
            "nullable": True,
        },
        "action_items": {
            "type": "array",
            "items": {"type": "string"},
            "nullable": True,
        },
        "summary": {"type": "string"},
        "classification": classification_schema,
    },
    "required": ["summary", "classification"],
}
```
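The schema above references `classification_schema` without defining it. The sketch below shows one way it might look for enum-based classification, along with a minimal check of a model response. The field names (`call_outcome`, `intent`), their enum values, the `check_response` helper, and the sample output are all hypothetical illustrations, not part of the released model or its training data.

```python
import json

# Hypothetical enum fields; replace with the labels your workflow needs.
classification_schema = {
    "type": "object",
    "properties": {
        "call_outcome": {
            "type": "string",
            "enum": ["connected", "no_answer", "callback_requested"],
        },
        "intent": {
            "type": "string",
            "enum": ["interested", "not_interested", "unclear"],
        },
    },
    "required": ["call_outcome", "intent"],
}

response_schema = {
    "type": "object",
    "properties": {
        "key_points": {"type": "array", "items": {"type": "string"}, "nullable": True},
        "action_items": {"type": "array", "items": {"type": "string"}, "nullable": True},
        "summary": {"type": "string"},
        "classification": classification_schema,
    },
    "required": ["summary", "classification"],
}


def check_response(raw_output, schema):
    """Parse JSON output and check required keys and enum values.

    This is a minimal check, not full JSON Schema validation.
    """
    data = json.loads(raw_output)
    missing = [key for key in schema["required"] if key not in data]
    if missing:
        raise ValueError(f"missing required keys: {missing}")
    class_spec = schema["properties"]["classification"]
    for field, spec in class_spec["properties"].items():
        value = data["classification"].get(field)
        if value not in spec["enum"]:
            raise ValueError(f"{field}={value!r} is not one of {spec['enum']}")
    return data


# Made-up example of what a response could look like.
sample_output = json.dumps({
    "summary": "Customer asked for a callback tomorrow morning.",
    "action_items": ["Call back tomorrow at 11 am"],
    "classification": {"call_outcome": "callback_requested", "intent": "interested"},
})

parsed = check_response(sample_output, response_schema)
print(parsed["classification"])
```

Keeping the enum values in one place means the same definition can be used both in the schema given to the model and in the downstream check that rejects unexpected labels.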

- **Developed by:** RinggAI

- **License:** apache-2.0

- **Finetuned from model:** unsloth/Qwen2.5-0.5B-Instruct

- **Parameter decisions were made using:** Schulman, J., & Thinking Machines Lab. (2025). *LoRA Without Regret.* Thinking Machines Lab: Connectionism. DOI: 10.64434/tml.20250929. Link: https://thinkingmachines.ai/blog/lora/

[RinggAI](https://ringg.ai)

[Unsloth](https://github.com/unslothai/unsloth)